Rick's b.log - 2023/03/11


mailto: blog -at- heyrick -dot- eu

The difference can be... shocking.

Under RISC OS, there are two measurements of importance. The first is the Allocation Unit (also called "LFAU") which determines how many bytes each bit in the disc map represents.

When we had floppy discs, the sector size of the disc was 1024 bytes, and that represented the smallest individual unit that could be assigned to each file. So if you saved twenty files of 1 byte each, they would consume 20K on the disc.

Things are a little more complicated on harddiscs, where the disc map has to be kept to a size that is reasonable to work with. According to Switcher, the Dynamic Areas assigned to the maps of both of my drives are around 960K.

The penalty here, however, is that you need to have a balance between the size of the map, and the ability to address the entire disc surface.

On contemporary RISC OS, idlen is given as 19 bits in length, but there's a terminating bit so it must be counted as 20 bits. This is the size of a map fragment.

For my 8GB µSD card, each bit represents 1024 bytes, so the granularity is 20K. This is to say, a 128 byte file will consume 20K on the disc, and a 23K file will need another fragment (as it's larger than one) so it'll consume 40K.
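As a rough sketch of that rounding (in Python rather than BASIC so it can be tried anywhere), here is how the space consumed by a single file works out. The function names are made up for illustration, and it ignores files sharing a fragment and fragmented files.

```python
def granularity(idlen: int, bytes_per_map_bit: int) -> int:
    """Smallest allocatable amount: (idlen + 1 terminating bit) * LFAU."""
    return (idlen + 1) * bytes_per_map_bit


def space_consumed(file_size: int, unit: int) -> int:
    """Round a file size up to a whole number of allocation units."""
    units = -(-file_size // unit)  # ceiling division
    return units * unit


unit = granularity(19, 1024)            # the 8GB card: idlen 19, 1024 bytes per bit
print(unit)                             # 20480 (20K)
print(space_consumed(128, unit))        # 20480 (20K)
print(space_consumed(23 * 1024, unit))  # 40960 (40K)
```

Swap in 2048 bytes per map bit and the same two files come out at 40K each.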

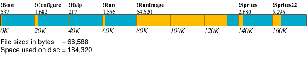

Here is a diagram of the first seven files within the StrongHelp application. These were chosen because every RISC OS application has a set of standard application files, !Boot, !Run, !Sprites blah blah.

In this diagram, light blue represents the disc surface or the number of bytes available, orange represents the number of bytes used by the files, and green (you'll see below) is unallocated space.

As you can see, the amount of bytes used by the files is 66,588 (just over 65K) while the amount consumed on the disc is 184,320 bytes (180K).

My backup media is a 16GB USB key. Since this is twice as large, it stands to reason that the map bits must represent twice as much. And indeed they do. Each map bit now represents 2048 bytes, and thus the granularity is 40K.

Now this isn't a great problem when we're talking of media that's, say, four to sixteen gigabytes in size. Sure, it sucks to lose 20-40K for every single tiny !Boot file, but we're still looking at manageable sizes.

Where things start to come unstuck is when we're looking at current modern sized harddiscs. Personally, I think it's crazy to use massive harddiscs on RISC OS because the filesystem is old and drive repair tools are limited (basically DiscKnight or start examining the source to see how it works). Smaller devices are better in my opinion, as you can attach them to a PC to do a full drive image.

What about the sort of half-terabyte drives that exist these days? Easy. Each map bit represents 64K, so the granularity is 1,280K, and when put into the form of a diagram, it looks like this...

Yes, you read that right. Your ~65K of files consumes 8,960K or 8¾ megabytes. 😱

How to tell the smallest allocation unit size of your media? There are two methods. The first is easy, the second less so.

The easy way - just run DiscKnight with the verbose option. It's always good to run DiscKnight periodically to get on top of small problems before they become big problems.

DiscKnight will tell you the media size of the device, along with the number of tracks, heads, and sectors. Those last three will be garbage: this stuff used to be important when drives were small and you actually addressed media that way (the so-called CHS method). These days, there just has to be something there that logically equals the drive size, so you'll see weird amounts of heads and sectors to make it all fit.

As I said, they are weird amounts to make things fit. 29457 (tracks) × 17 (heads) × 31 (sectors) × 512 (bytes per sector) equals 7,948,205,568 bytes which is the size of the media.

Looking down, it's right there. Minimum object size.

The harder way is to just ask and do some simple calculations.

This will read the media description for drive

Now that you have some data in the buffer, time to fiddle with it. The size of idlen is at offset +4, and the log2 value of bytes per map bit is at offset +5. Log2 means you raise 2 to the power of that value to get the actual number of bytes. Add one to idlen for the terminating bit, then just multiply one by the other.

There's your answer. For my µSD card media, it's 20K or 20,480 bytes.
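For anybody wanting to do the same sum away from a RISC OS machine, here is a minimal Python sketch using the same offsets as the BASIC PRINT line (idlen at +4, log2 of bytes per map bit at +5). The function name and the sample buffer are made up for illustration; the buffer is synthetic, with only those two bytes filled in from the values DiscKnight reported for my card.

```python
def min_object_size(disc_record: bytes) -> int:
    """Minimum object size from a block filled in by a DescribeDisc call."""
    idlen = disc_record[4]       # ID length in bits, excluding the terminating bit
    log2_bpmb = disc_record[5]   # log2 of bytes per map bit
    return (idlen + 1) * (1 << log2_bpmb)


# Synthetic 64 byte block: everything except offsets 4 and 5 is left as zero.
sample = bytearray(64)
sample[4] = 19   # ID Length : 19
sample[5] = 10   # 2^10 = 1024 bytes per map bit
print(min_object_size(bytes(sample)))   # 20480
```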

In the beginning of the week, I had the chainsaw chain soaking in rapeseed oil (what I had handy in the kitchen). Since then, I hung it up to drip off excess oil back into the bowl.

I've since reassembled the chainsaw, adjusted the chain tension, and decanted the oil back into the container for next time.

But, yeah, need nice weather. Just kind of glad I squeezed in a cut of the grass a couple of weeks ago. It... needs another cut. Pffft.

There's linguine (flatter pasta with rounded edges), mafaldine (long strips with pleasingly ruffled edges), farfalle (the bow ties), and papiri. You can see the papiri uses a different method and/or wheat (look at the colour) which gives a firmer texture and rougher feel. There's no specific science behind what was measured. I just grabbed a random amount of each.

If you use decent pasta (I use Barilla), then the pasta itself has a flavour so it isn't necessary to drown it in some red muck that isn't sure if it's a vegetable or a fruit, with bits of inedible skin and equally inedible so-called pieces of beef.

Anyway, given my feelings on pasta sauce and that it's all about the texture (tactile stimuli for the mouth, if you prefer), here's how I serve it.

It was prepared in the multicooker on the boil programme for 20 minutes. While that's at least twice as long as pasta would normally take, it's a cooler cycle than a rolling boil and an enclosed container. The farfalle is a little overcooked, the papiri is perfect. Again, textures.

Seriously, that's all it needs.

So... everything he says in those exact words.

File space consumption

There was an interesting discussion on the forum regarding file space consumption. That is to say, if you save a file to disc, how much space the file actually uses, as opposed to how big the file is.

The second is the ID length, measured in bits. This is how many bits in the map represent a fragment, that is to say, usually a file.

Multiply the number of bits used (20) by the number of bytes per bit (the LFAU) and you have the size of the smallest individual quantity on the disc, the granularity of file sizes.

Files with 20K granularity.

For simplicity we'll ignore files sharing a fragment (as it only happens in specific circumstances) and fragmented files. The reality is much more complex than the diagram, but the basic principles are correct.

This causes the following changes, which is basically doubling the amount of space required to store exactly the same files.

Files with 40K granularity (the last block is unused).

Anyway, we can see there's a definite pattern here. Every time the drive size doubles, the number of bytes per map bit doubles. So a 32GB device will have a minimum size of 80K.
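That pattern can be sketched in a few lines of Python. This is only an extrapolation from the two devices measured here (1024 bytes per map bit at 8GB, doubling with each doubling of size, idlen staying at 19), not a description of how the formatter actually picks its values, and the function name is made up.

```python
def estimated_granularity(disc_gb: int, idlen: int = 19) -> int:
    """Estimate the minimum object size in bytes for a given disc size.

    Assumption: 1024 bytes per map bit at 8GB, doubling every time the
    disc size doubles, as observed on the 8GB and 16GB devices.
    """
    bytes_per_map_bit = 1024
    size_gb = 8
    while size_gb < disc_gb:
        size_gb *= 2
        bytes_per_map_bit *= 2
    return (idlen + 1) * bytes_per_map_bit


for gb in (8, 16, 32, 512):
    print(f"{gb}GB: {estimated_granularity(gb) // 1024}K")
# 8GB: 20K
# 16GB: 40K
# 32GB: 80K
# 512GB: 1280K
```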

Files with 1¼M granularity (the last two blocks are unused).

Boot block - Boot Record

Number of Tracks : 29457

Number of Heads : 17

Sectors per track : 31

Sector size : 512

Density : hard disc

ID Length : 19

Bytes per map bit (LFAU) : 1024

Minimum object size : 20.0K

Yes, it's only 7.4GB. For various bullshit reasons, storage media is counted in units of 1,000 not 1,024.
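To check the sums, here is the same multiplication in Python, using the figures from the DiscKnight report above and showing the size in both flavours of gigabyte.

```python
tracks, heads, sectors, sector_size = 29457, 17, 31, 512

size = tracks * heads * sectors * sector_size
print(size)                        # 7948205568 bytes
print(round(size / 1000**3, 2))    # 7.95 (units of 1,000, as on the packaging)
print(round(size / 1024**3, 2))    # 7.4  (units of 1,024)
```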

>DIM b% 64

>SYS "SDFS_DescribeDisc", ":0", b%

This reads the media description for drive :0 on the SDFS filing system into the block pointed to by b%.

For other media, you'll need to change the SWI to refer to the correct filing system and drive identifier. For example:

SYS "SCSIFS_DescribeDisc", ":0", b%

or:

SYS "ADFS_DescribeDisc", ":4", b%

etc, etc.

Note that the correct SWI name for SCSI is SCSIFS, not SCSI even though Filer windows say "SCSI::" and you use *SCSI to select SCSIFS. That's... just how it is.

>PRINT ( ( b%?4 + 1 ) * ( 2 ^ b%?5 ) )

20480

>

Chainsaw

Since storm... what was this one called? Larissa? Anyway, since it passed through yesterday, today is grey and rainy so I won't be out slicing and dicing tree pieces.

I did turn some ground, last year's potato patch (by the stream) in order to sow grass seed. But since the rotovator is broken, I did it by hand. What a pain in the back. Literally.

The starter cord of the rotovator has had it. I've ordered a replacement from Amazon, that'll be here sometime...

Brings a whole new meaning to "drip dry".

The idea isn't to use cooking oil to replace the regular chain oil, it's simply to ensure that there is some sort of oil in all of the many joints. I chose colza oil because it's runny so can impregnate, and because it can cope with heat (like chip pans) so ought to handle whatever temperature changes the chain might endure in hacking apart wood.

Food texture

Maybe I'm a little odd, but for me, food texture is one of the most appealing parts of a meal. This shows up when I make pasta. I don't simply chuck some spaghetti into a pan and boil it awhile.

No, I start with this.

Pasta waiting to be cooked.

As you can imagine, I'm not a fan of bolognese/bolognaise. Especially given as most people outside of Italy use it wrongly (it's for flat pasta like tagliatelle, not rounded pasta like spaghetti...and yes, it makes a difference). Double burn-in-hell points if the sauce is piled on top, not mixed in.

Okay, let's just take a quick diversion here. If you're going to dump a sauce onto your pasta, it helps to match the sauce to the pasta in question.

Long thin pasta (like spaghetti) work better with lighter cream or oil based sauces. Carbonara might be an option if you make it properly. Most carbonara I've eaten would stick to a spoon if you turned it upside down. That's far too thick.

More substantial long flat pasta (tagliatelle, mafaldine, etc) work well with a thicker sauce, like how carbonara usually ends up, or bolognese. Various chunky tomato sauces work as well, such as the basil one.

Twisted pasta (fusilli, etc) have lots of nooks and crannies which are great at holding sauces with fine bits, such as a pesto. Not all twisted pasta has the same size curvy bits, so consider the size of the bits in the sauce when matching to the pasta.

And, finally, tubular pasta (penne, etc) have big voids inside that are good at holding chunky bits. You can either use a rough-chopped (or homemade) vegetable sauce, or if the pasta is large enough like cannelloni, it can be stuffed and baked.

Special dispensation for Americans. Where would we be without Mac&Cheese?

Finally, if you put a sauce on stuffed pasta (like ravioli), you're an idiot. They have a flavourful filling, it's that you should be tasting so just gently drizzle some olive oil on top. If you must sex it up a little, quickly fry a large lump of butter with a chopped herb (like basil or sage) and use that as the 'sauce'.

Pasta waiting to be eaten.

It's gently tossed in butter (not marge), and dusted with ground black pepper for some extra zing.

Lineker, Nazis, and the BBC

I was going to write something. I thought about how to approach this topic. And then I noticed Jonathan Pie posted a new video.

Anon, 11th March 2023, 19:41

I thought they'd changed the file system in RO4 so it used fixed 4K blocks?

For an example of one way it should be done, look at the way NTFS handles small files. It can pack multiple small files into a single file system block, where the total is less than 4K. It also supports 'sparse' files.

For those who aren't familiar with sparse files:

https://en.wikipedia.org/wiki/Sparse_file

I know we used to bash Windoze back in the 90s (and fair enough, it was crap back then) but it's come on a long way. 25 years of development and it's actually pretty stable. Although there are certain things I still miss occasionally about the "RISC OS way" of doing stuff.

Rick, 12th March 2023, 11:03

NTFS: 8GB & 16GB & 512GB = 4K

FAT16: 8GB = 128K, 16GB = 256K, 512GB not supported

FAT32: 8GB = 4K, 16GB = 8K, 512GB = 32K (but technically not supported under Windows)

exFAT: 8GB & 16GB = 32K, 512GB = 128K

So for my 8GB USB stick, exFAT is worse (nobody would seriously use FAT16), but in all other cases it's much MUCH better than FileCore.

That NTFS can happily handle 4K blocks on a big disc while FileCore spaffs a megabyte and a quarter on Every Single Freaking File is a sick joke, really.

Floppy based filing systems Do Not Scale!

(source: Microsoft tech documents)

Anon, 12th March 2023, 20:40

I particularly like the way (mentioned briefly above) it handles tiny files. If you've got, say, two files that are about 1,500 bytes each, NTFS will 'pack' them into a single 4K block.

That's 1,500 bytes stored on the disk - so if you have NTFS compression turned on (which works very well) then it'll reduce 'wasted' space even more.

Speaking of NTFS compression, if you have a reasonably fast PC running a newer version of Windows (this works on Win10 and Win11, possibly on Win8, not sure about Win7), try this from an administrator command prompt:

cd "c:\Program Files"

compact /c /s /i /f /exe:lzx

(Repeat for the "Program Files (x86)" directory on 64-bit systems.)

If you haven't got Bitlocker running on your boot drive then you can also do this:

compact /compactos:always

(If you do have Bitlocker enabled then you can still use the method detailed above for the Windows directory.)

The help text of the 'compact' command (that's 'compact' as in compress, not as in the old 'compact' command on ADFS or DFS!) states that the /exe option "uses compression optimised for executable files which are read frequently and not modified". The LZX algorithm is the best compression, but it does take some time to compress all the executables. Worth doing though as it saves several gigs of disk space.

J.G.Harston, 15th March 2023, 23:19

druck, 1st June 2023, 01:35

© 2023 Rick Murray

This web page is licenced for your personal, private, non-commercial use only. No automated processing by advertising systems is permitted. RIPA notice: No consent is given for interception of page transmission.